Human-centric and ethical AI development means designing technologies that put people first by building in fairness, accountability, transparency, and privacy. Done well, it helps industries such as healthcare, finance, education, and autonomous systems grow responsibly by reducing bias, protecting sensitive data, working through ethical dilemmas, and building global trust, so that artificial intelligence remains a tool for progress and human empowerment rather than a source of harm.

Rise and Impact of AI

Artificial Intelligence (AI) has gone from a futuristic concept to an integral part of our daily lives. From the moment we wake up, AI touches many aspects of our routines, whether through voice assistants like Siri or Alexa, personalized content recommendations on streaming platforms, or smart home devices that automate lighting and temperature. Businesses increasingly rely on AI for tasks such as customer support chatbots, inventory management, and predictive analytics. Its pervasive presence has not only improved efficiency but also reshaped how humans interact with technology, creating a more connected and responsive digital ecosystem.

Need for Human-Centric and Ethical AI

Despite AI’s benefits, unchecked development can lead to significant risks, including biased decision-making, privacy violations, and unintended social consequences. Human-centric AI emphasizes designing systems that prioritize human values, fairness, and transparency. Ethical AI frameworks guide developers to create responsible technologies that respect user privacy, mitigate biases, and ensure accountability. As AI continues to advance, embedding ethics into its core design becomes crucial for fostering trust and promoting societal well-being. Balancing innovation with responsibility ensures that AI remains a tool for human empowerment rather than a source of harm.

Principles of Human-Centric AI

As Artificial Intelligence becomes an integral part of modern life, ensuring that it remains human-centric is more important than ever. Human-centric AI is not just about advanced algorithms and automation—it is about building systems that respect human values, safeguard rights, and promote fairness. At its core, this approach is guided by four essential principles: Transparency, Accountability, Privacy and Data Protection, and Fairness with Bias Mitigation. Together, these principles create AI systems that are explainable, responsible, secure, and inclusive. By embedding these values into AI, we can ensure that technology serves humanity ethically while fostering trust, equity, and long-term social acceptance.

Transparency

Transparency ensures that AI systems operate in ways that are understandable and explainable to users. Explainable AI (XAI) allows stakeholders to comprehend how decisions are made, fostering trust and accountability. For instance, in healthcare, transparent AI can clarify why a particular diagnosis or treatment recommendation was suggested, enabling doctors to validate outcomes. Similarly, financial institutions use transparent AI algorithms to explain loan approvals or credit scoring. By providing clear insights into AI processes, transparency reduces the risk of errors, misuse, and suspicion, ensuring that technology serves humans rather than confusing or misleading them.
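To make the idea of an explainable decision concrete, here is a minimal sketch of a linear score that reports how much each input contributed. The feature names, weights, and baseline are hypothetical values chosen for illustration, not a real credit model, and real systems typically need more sophisticated explanation methods.

```python
from typing import Dict, List, Tuple

# Hypothetical weights for an illustrative linear credit score (not a real model).
WEIGHTS: Dict[str, float] = {
    "income_thousands": 0.5,
    "years_employed": 2.0,
    "missed_payments": -10.0,
}
BASELINE = 20.0  # hypothetical starting score


def explain_score(applicant: Dict[str, float]) -> Tuple[float, List[Tuple[str, float]]]:
    """Return the score and each feature's contribution, largest effect first."""
    contributions = [(name, WEIGHTS[name] * applicant[name]) for name in WEIGHTS]
    score = BASELINE + sum(value for _, value in contributions)
    # Sort by absolute size so the most influential factors are listed first.
    contributions.sort(key=lambda item: abs(item[1]), reverse=True)
    return score, contributions


score, reasons = explain_score(
    {"income_thousands": 60, "years_employed": 4, "missed_payments": 2}
)
for name, value in reasons:
    print(f"{name}: {value:+.1f}")
print(f"total score: {score:.1f}")
```

Because every contribution is listed next to the final score, a reviewer can see which factor drove the outcome and challenge it if it looks wrong.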

Accountability

Accountability in AI development involves clearly defining responsibility for the outcomes of AI systems. Developers, organizations, and policymakers must ensure that AI actions can be traced back to responsible parties. This includes monitoring AI decisions, creating audit trails, and establishing governance policies to handle errors or misuse. For example, autonomous vehicles must have mechanisms to assign accountability in case of accidents. By embedding accountability into AI, organizations demonstrate ethical responsibility, minimize potential harm, and build public confidence in AI technologies, ensuring that innovation does not compromise social or legal obligations.
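One way to picture an audit trail is as an append-only log in which each entry includes a hash of the previous one, so that later edits become detectable. The sketch below assumes a simple in-memory list and hypothetical actor and decision labels; a production system would also need durable storage, access control, and timestamps.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: Dict[str, Any]) -> str:
    """Hash an entry body in a stable (sorted-key) JSON form."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log: List[Dict[str, Any]], actor: str, decision: str) -> None:
    """Append a decision record that is chained to the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    body = {"actor": actor, "decision": decision, "prev_hash": prev_hash}
    log.append({**body, "hash": _digest(body)})


def verify(log: List[Dict[str, Any]]) -> bool:
    """Return True only if no entry has been altered or reordered."""
    prev_hash = GENESIS_HASH
    for entry in log:
        body = {key: entry[key] for key in ("actor", "decision", "prev_hash")}
        if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True


audit_log: List[Dict[str, Any]] = []
append_entry(audit_log, "model-v1", "loan_denied")          # hypothetical labels
append_entry(audit_log, "reviewer-42", "override_approved")
print(verify(audit_log))
```

Recording both the automated decision and the human override in the same chain keeps the question "who decided what" answerable after the fact.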

Privacy and Data Protection

Protecting user data is a cornerstone of human-centric AI. Ethical AI systems prioritize user privacy, collecting only the data they need and securing it against unauthorized access. Compliance with regulations such as the GDPR helps ensure that personal information is handled responsibly. In industries like healthcare and finance, safeguarding sensitive data is critical to maintaining trust and preventing exploitation. Techniques such as data anonymization, encryption, and secure storage reinforce these safeguards. By respecting user data rights, AI developers can build systems that are both effective and ethical, protecting individuals while still delivering innovative solutions.
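As a rough illustration of collecting only necessary data and masking identifiers, the sketch below drops every field that is not on an allow-list and replaces the user ID with a keyed hash. The field names and the key are hypothetical. Note that keyed hashing is pseudonymization rather than full anonymization: whoever holds the key can re-link records, so the key itself must be protected.

```python
import hashlib
import hmac
from typing import Dict

# Hypothetical allow-list: the only fields this illustrative pipeline needs.
ALLOWED_FIELDS = {"age_band", "diagnosis_code"}


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 hash (HMAC)."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record: Dict[str, str], secret_key: bytes) -> Dict[str, str]:
    """Keep only allow-listed fields and swap the raw ID for a pseudonym."""
    reduced = {key: value for key, value in record.items() if key in ALLOWED_FIELDS}
    reduced["subject"] = pseudonymize(record["user_id"], secret_key)
    return reduced


raw_record = {
    "user_id": "patient-001",
    "name": "Jane Doe",
    "age_band": "40-49",
    "diagnosis_code": "E11",
}
safe_record = minimize(raw_record, secret_key=b"example-key-do-not-reuse")
print(sorted(safe_record))
```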

Fairness and Bias Mitigation

Fairness requires AI systems to operate without discrimination, ensuring equitable treatment for all users. Bias can arise from skewed training data, algorithmic design flaws, or societal inequalities. Mitigating bias involves using diverse datasets, testing for discriminatory outcomes, and continuously monitoring AI performance. For instance, recruitment algorithms must avoid favoring certain demographics, and loan approval systems should treat applicants equitably. By addressing fairness proactively, AI becomes more inclusive, trustworthy, and aligned with human values, reducing the risk of harm and fostering social acceptance of technology.
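Testing for discriminatory outcomes can start with something as simple as comparing selection rates across groups. The sketch below computes each group's rate and the ratio of the lowest to the highest rate. The group labels and outcomes are made-up sample data, and the 0.8 threshold follows the commonly cited "four-fifths" rule of thumb. It is one screening heuristic among many, not a complete fairness test.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(outcomes: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Compute the fraction of positive outcomes for each group."""
    totals: Dict[str, int] = defaultdict(int)
    selected: Dict[str, int] = defaultdict(int)
    for group, was_selected in outcomes:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates: Dict[str, float]) -> float:
    """Ratio of the lowest group rate to the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so rates are equal
    return min(rates.values()) / highest


# Made-up sample: group A has 8 of 10 selected, group B has 5 of 10.
sample = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)
rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # four-fifths rule of thumb
print(rates, round(ratio, 3), needs_review)
```

A low ratio does not prove discrimination on its own, but it flags the system for the closer human review the paragraph above calls for.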

Ethical AI Development Practices

Building truly ethical and human-centric AI requires more than just technical innovation—it demands practices that prioritize inclusion, accountability, and long-term responsibility. From designing systems that serve diverse communities to ensuring continuous monitoring, stakeholder collaboration, and sustainability, ethical AI development integrates values with technology. These practices not only reduce risks such as bias, unfair treatment, or misuse but also enhance trust, accessibility, and social impact. By embedding these principles into every stage of AI development, organizations can create intelligent systems that advance innovation while respecting human dignity and protecting the planet.

Inclusive Design

Inclusive design is a cornerstone of human-centric AI. It ensures that AI systems serve diverse user groups, considering different cultural, social, and accessibility needs. By incorporating perspectives from various communities during development, AI solutions avoid unintended discrimination and promote fairness. For example, voice recognition systems trained on diverse accents and languages improve accuracy and usability for everyone. Inclusive design also enhances user experience, making AI more accessible and trusted. Prioritizing inclusion at every stage of development ensures that ethical AI principles are embedded from the ground up, creating systems that respect human diversity.

Continuous Monitoring and Evaluation

Continuous monitoring and evaluation are essential for maintaining ethical AI standards. AI systems must be regularly audited to detect biases, errors, and performance issues. For instance, predictive algorithms in finance or healthcare should be continuously reviewed to ensure fairness and reliability. Automated alerts, performance dashboards, and periodic human reviews help identify risks early. By implementing robust monitoring practices, organizations can ensure that human-centric AI systems remain accountable, transparent, and aligned with ethical guidelines, while adapting to evolving user needs and data patterns.
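An automated alert of the kind described above can be sketched as a check that compares a metric in each monitoring window against a baseline. The baseline, tolerance, and weekly figures below are hypothetical. A real deployment would choose them from historical data and route alerts to a human reviewer.

```python
from typing import List


def drift_alerts(
    baseline_rate: float, window_rates: List[float], tolerance: float = 0.05
) -> List[int]:
    """Return the indexes of windows whose rate strays beyond the tolerance."""
    return [
        index
        for index, rate in enumerate(window_rates)
        if abs(rate - baseline_rate) > tolerance
    ]


# Hypothetical weekly approval rates compared with a 30% baseline.
weekly_approval_rates = [0.31, 0.29, 0.42, 0.33]
flagged_weeks = drift_alerts(baseline_rate=0.30, window_rates=weekly_approval_rates)
print(flagged_weeks)
```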

Collaboration Between Stakeholders

Collaboration among developers, ethicists, policymakers, and end-users strengthens ethical AI practices. Multidisciplinary teams help identify potential risks, design mitigation strategies, and ensure that AI aligns with societal values. For example, AI in healthcare benefits from input by doctors, patients, and regulatory authorities, which helps make outcomes safe and fair. Transparent communication among stakeholders also fosters trust and leads to better-informed decisions. By promoting cooperative development, organizations create human-centric AI systems that are socially responsible, accountable, and capable of positively impacting diverse communities.

Sustainability Considerations

Sustainability is increasingly recognized as a key aspect of ethical AI. Developing energy-efficient AI models reduces environmental impact, ensuring that innovation does not come at the cost of ecological harm. Techniques such as model optimization, use of renewable energy, and minimizing computational waste contribute to sustainable practices. Companies adopting human-centric AI principles also consider long-term societal benefits, balancing technological progress with environmental stewardship. By integrating sustainability into AI development, organizations uphold ethical standards while fostering responsible innovation that supports both humans and the planet.

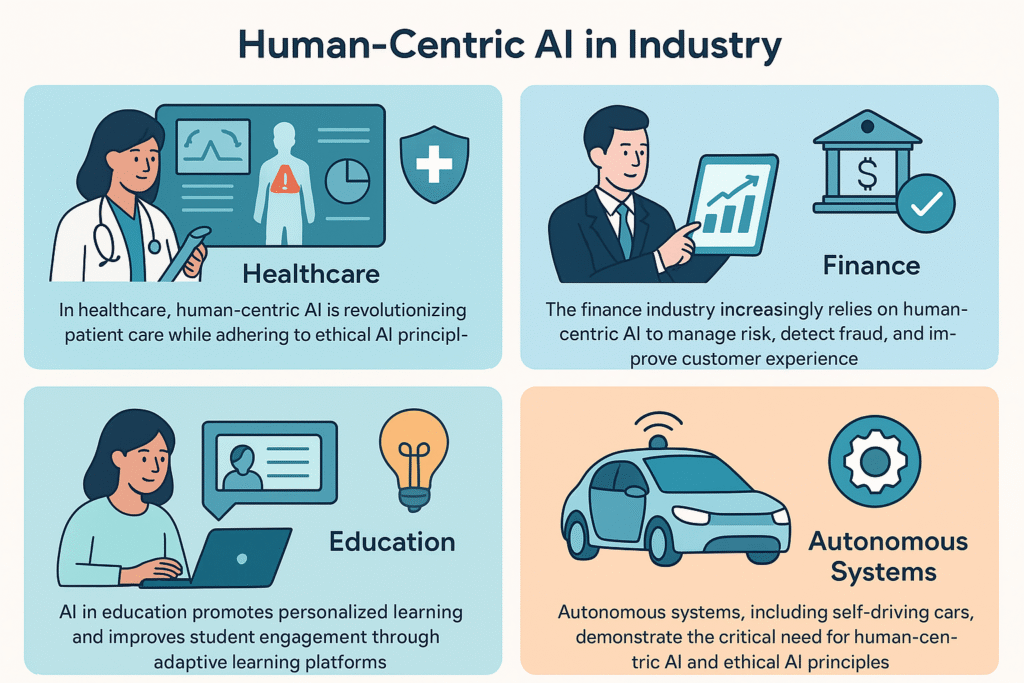

Human-Centric AI in Industry

Artificial Intelligence (AI) is transforming industries worldwide, reshaping the way humans interact with technology. From improving healthcare outcomes to streamlining financial services, enhancing education, and powering autonomous systems, AI continues to unlock new possibilities. However, true progress lies not only in innovation but also in ensuring that AI remains human-centric—built on ethics, transparency, and accountability. By focusing on fairness, safety, and inclusivity, human-centric AI ensures that technological growth serves society while protecting human values and well-being.

Healthcare

In healthcare, human-centric AI is revolutionizing patient care while adhering to ethical AI principles. AI-driven diagnostic tools, predictive analytics, and personalized treatment plans enhance medical outcomes. For instance, AI algorithms can detect early signs of diseases such as cancer or diabetes, assisting doctors in timely intervention. Ensuring transparency and accountability in these systems is crucial to maintain trust between patients and medical professionals. Privacy protection also plays a key role, as sensitive health data must be securely handled. By integrating ethical considerations, healthcare AI not only improves efficiency but also prioritizes human well-being.

Finance

The finance industry increasingly relies on human-centric AI to manage risk, detect fraud, and improve customer experience. AI-powered credit scoring, investment recommendations, and transaction monitoring provide efficiency and accuracy. Ethical AI practices ensure fairness in loan approvals and prevent biased decisions against specific demographic groups. Transparency and accountability mechanisms allow financial institutions to explain AI-driven decisions to customers, fostering trust. By combining innovative technology with ethical safeguards, AI in finance balances operational efficiency with social responsibility, ensuring equitable treatment for all stakeholders.

Education

AI in education promotes personalized learning and improves student engagement through adaptive learning platforms. Human-centric AI ensures that content recommendations, assessments, and feedback consider individual needs, abilities, and learning styles. Ethical AI safeguards student privacy by preventing unauthorized use of personal information. Inclusive design also improves accessibility for students with disabilities or from diverse backgrounds. By focusing on transparency and fairness, educational AI systems support teachers and students alike, creating an equitable and effective learning environment while upholding ethical standards in technology deployment.

Autonomous Systems

Autonomous systems, including self-driving cars and industrial robots, demonstrate the critical need for human-centric AI and ethical AI principles. These systems must operate safely, predictably, and fairly, minimizing risks to humans. Accountability mechanisms, such as logging decision-making processes and fail-safe protocols, are essential to address potential errors. Transparency in system behavior helps stakeholders understand AI actions and build trust. By prioritizing safety, fairness, and responsibility, autonomous systems can achieve their full potential while ensuring that technological advancement aligns with human values and societal well-being.

Challenges and Solutions

Bias and Discrimination

One of the primary challenges in human-centric AI is bias, which can lead to unfair or discriminatory outcomes. Bias often emerges from skewed training data, flawed algorithms, or societal inequalities reflected in datasets. Ethical AI frameworks help identify and mitigate these biases by employing diverse datasets, continuous auditing, and fairness-aware algorithms. For example, recruitment or loan approval systems must be regularly tested to prevent discriminatory decisions. By proactively addressing bias, developers ensure that AI systems remain equitable, transparent, and aligned with human values, building trust among users and stakeholders.

Security Risks

Security is another critical challenge in AI development. Sensitive data used by AI systems, such as personal health or financial information, is vulnerable to breaches, and the models themselves can be targeted by adversarial attacks. Implementing robust cybersecurity measures, encryption, and access control protocols is essential to protect users. Additionally, monitoring for potential misuse or manipulation of AI algorithms helps maintain reliability and integrity. Integrating security considerations into human-centric AI practices helps uphold ethical principles while safeguarding sensitive data, maintaining accountability, and minimizing risks to individuals and organizations.

Ethical Dilemmas

Ethical dilemmas arise when AI systems face situations that involve conflicting human values or life-critical decisions. Examples include autonomous vehicles deciding between safety priorities or AI in healthcare recommending treatment under uncertainty. Ethical AI approaches, such as implementing transparent decision-making frameworks, stakeholder collaboration, and value-sensitive design, help navigate these dilemmas. By establishing clear guidelines and accountability, AI developers can create human-centric AI systems that are responsible, trustworthy, and socially aligned, ensuring technology supports humanity without causing unintended harm.

Future of Human-Centric AI

The future of human-centric AI lies in innovation that balances intelligence with responsibility. From emerging trends like explainable AI, fairness checks, and privacy-preserving techniques to global collaboration that sets common ethical standards, the path forward emphasizes trust, accountability, and inclusivity. By aligning technology with human values and fostering worldwide cooperation, AI can evolve into a force that empowers people, respects diversity, and drives sustainable progress.

Emerging Trends

Several emerging trends put ethical considerations and societal well-being first. They include explainable AI (XAI), which provides transparency in decision-making, and AI governance frameworks that support accountability. Advances in natural language processing, computer vision, and predictive analytics are being aligned with ethical AI principles to create systems that are not only intelligent but also responsible. Developers are increasingly incorporating value-sensitive design, fairness checks, and privacy-preserving techniques to mitigate the risks of AI deployment. By integrating these trends, future AI technologies can enhance human decision-making, improve accessibility, and foster trust among users while staying aligned with human values.

Global Collaboration

Global collaboration is essential to shape the future of human-centric AI. International cooperation among policymakers, researchers, and industry leaders helps establish common standards for ethical AI deployment. Initiatives such as AI ethics boards, cross-border data protection agreements, and shared best practices promote responsible innovation worldwide. Collaboration also ensures that AI systems consider diverse cultural, social, and economic perspectives, reducing bias and promoting fairness globally. By fostering a cooperative ecosystem, countries and organizations can collectively address challenges such as privacy, security, and ethical dilemmas. A future built on collaboration ensures that AI technologies remain transparent, accountable, and aligned with human values, empowering humanity rather than creating unintended harm.
